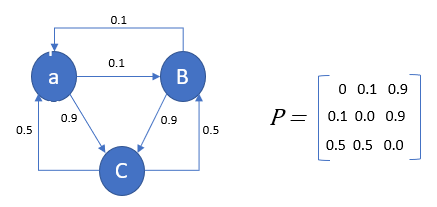

The changes of state of the system are called transitions. The probabilities associated with the various state changes are called transition probabilities.
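To make these terms concrete, here is a minimal Python sketch of a transition table for a hypothetical two-state weather chain. The state names and probabilities are made-up illustrative values, and `TRANSITIONS` and `is_valid_transition_table` are hypothetical names. Each entry is one transition probability. Because the chain must move somewhere (possibly back to the same state) at every step, each row has to sum to 1.

```python
# Hypothetical two-state weather chain; the probabilities are illustrative
# numbers chosen for this example, not data from any source.
# TRANSITIONS[a][b] is the transition probability Pr(next = b | current = a).
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def is_valid_transition_table(table, tol=1e-9):
    """Check that every probability is in [0, 1] and that each row sums to 1."""
    for row in table.values():
        if any(p < 0 or p > 1 for p in row.values()):
            return False
        if abs(sum(row.values()) - 1.0) > tol:
            return False
    return True


print(is_valid_transition_table(TRANSITIONS))  # True
```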

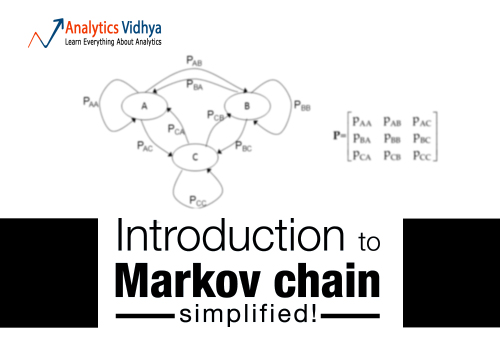

Many applications of Markov chains use finite or countably infinite state spaces, because these allow a more straightforward statistical analysis. A Markov chain can be represented using a probabilistic automaton (it only sounds complicated!).
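The automaton view translates directly into a simulation: from the current state, pick the next state at random according to that state's row of transition probabilities. The sketch below assumes an illustrative two-state weather chain (made-up numbers) and uses hypothetical helper names `step` and `simulate`; it relies only on the standard library's `random.Random.choices`.

```python
import random

# Hypothetical weather chain with illustrative (made-up) probabilities.
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def step(current, transitions, rng):
    """Pick the next state using only the current state's row of probabilities."""
    row = transitions[current]
    next_states = list(row)
    weights = [row[s] for s in next_states]
    return rng.choices(next_states, weights=weights, k=1)[0]


def simulate(start, transitions, n_steps, seed=None):
    """Return the list of visited states, beginning with `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], transitions, rng))
    return path


path = simulate("sunny", TRANSITIONS, n_steps=10, seed=42)
print(path)
```

Passing a `seed` makes a run reproducible; omitting it gives a different random path each time.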

A random process, often called a stochastic process, is a mathematical object defined as a collection of random variables. A Markov chain has either a discrete state space (the set of possible values of the random variables) or a discrete index set (often representing time); depending on which of these is discrete, many variations of Markov chains exist. Usually the term "Markov chain" is reserved for a process with a discrete set of times, that is, a discrete-time Markov chain (DTMC).

A discrete-time Markov chain involves a system that is in a certain state at each step, with the state changing randomly between steps. The steps are often thought of as moments in time (but they might just as well refer to physical distance or any other discrete measurement). A discrete-time Markov chain is a sequence of random variables $X_1, X_2, X_3, \ldots$ with the Markov property: the probability of moving to the next state depends only on the present state and not on the previous states. Written as a formula:

$$\Pr(X_{n+1} = x \mid X_1 = x_1, X_2 = x_2, \ldots, X_n = x_n) = \Pr(X_{n+1} = x \mid X_n = x_n)$$

As you can see, the probability distribution of $X_{n+1}$ depends only on the value of $X_n$ that precedes it. In other words, knowing the current state is all that is necessary to determine the probability distribution of the next state: given the present, the future is conditionally independent of the past.

In the setting used here, the possible values of $X_i$ form a countable set $S$ called the state space of the chain. The state space can be anything: letters, numbers, basketball scores or weather conditions. While the time parameter is usually discrete, the state space of a discrete-time Markov chain does not have any widely agreed-upon restrictions, and the term can refer to a process on an arbitrary state space.
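Because the next state depends only on the current one, the distribution of $X_{n+1}$ can be computed from the distribution of $X_n$ and the transition probabilities alone: $\Pr(X_{n+1} = y) = \sum_{x \in S} \Pr(X_n = x)\,\Pr(X_{n+1} = y \mid X_n = x)$. The Python sketch below assumes a hypothetical two-state weather chain with illustrative probabilities that do not change over time (a time-homogeneous chain); `next_distribution` and `distribution_after` are made-up names.

```python
# Hypothetical time-homogeneous weather chain (illustrative probabilities).
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def next_distribution(dist, transitions):
    """One step: Pr(X_{n+1} = y) = sum over x of Pr(X_n = x) * Pr(y | x)."""
    new = {state: 0.0 for state in transitions}
    for x, p_x in dist.items():
        for y, p_xy in transitions[x].items():
            new[y] += p_x * p_xy
    return new


def distribution_after(start, transitions, n_steps):
    """Distribution of the state after n_steps, starting surely in `start`."""
    dist = {state: 0.0 for state in transitions}
    dist[start] = 1.0
    for _ in range(n_steps):
        dist = next_distribution(dist, transitions)
    return dist


print(distribution_after("sunny", TRANSITIONS, 1))  # {'sunny': 0.8, 'rainy': 0.2}
```

Note that `next_distribution` never looks at earlier states: the Markov property is exactly what makes this one-step update sufficient.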

# Markov Models Python Tutorial Simulator

A Markov chain is a random process with the Markov property. Markov chains are widely employed in economics, game theory, communication theory, genetics and finance. They arise broadly in statistics, especially Bayesian statistics, and in information-theoretic contexts. In real-world problems, they are used to model cruise control systems in motor vehicles, queues of customers arriving at an airport, currency exchange rates, and more. The PageRank algorithm, originally proposed for Google's internet search engine, is based on a Markov process. Reddit's Subreddit Simulator is a fully automated subreddit that generates random submissions and comments using Markov chains. So cool!
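The Subreddit Simulator's actual implementation is not described here, but the core idea of Markov text generation can be sketched in a few lines: treat each word as a state, record which words follow it in some training text, and then walk the chain. This is a minimal word-level (order-1) sketch in Python with a toy corpus; `build_chain` and `generate` are hypothetical names, not part of any library.

```python
import random
from collections import defaultdict


def build_chain(text):
    """Map each word to the list of words that follow it in `text`.

    Repeated followers are kept, so sampling uniformly from the list
    reproduces the observed transition frequencies.
    """
    words = text.split()
    chain = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return dict(chain)


def generate(chain, start, max_words, seed=None):
    """Random walk over the word chain; stops early at a word with no followers."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < max_words and out[-1] in chain:
        out.append(rng.choice(chain[out[-1]]))
    return " ".join(out)


corpus = "the cat sat on the mat and the dog sat on the rug"
chain = build_chain(corpus)
print(generate(chain, "the", max_words=8, seed=1))
```

A common refinement is to use the previous two or more words as the state, which tends to produce more coherent text at the cost of needing more training data.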

Markov chains are used extensively throughout mathematics.
